QA for OBI (Part 2 – The Tests)

Posted by Jeff McQuigg

In the last post I discussed some general, overall topics to prepare you for a better QA cycle. In this post I’d like to get into the details a bit more to discuss some of the actual tests you can perform across both ETL and OBI layers. In particular I’m going to focus on data testing for ETL and OBI.

Data Validation Tests for ETL

Clearly the emphasis for ETL is on ensuring data is accurately moved from sources into the target while being transformed properly. This section will lay out some of the kinds of tests to consider writing for the various layers of your system.

Before getting into the specific tests, keep in mind the following aspects:

1. Single Hop: Think about writing separate scripts for each hop in your data architecture where data moves from station to station. If you have a staging or landing layer, write a test for it. If you have another layer moving on to your base tables, then write scripts for those as well. Even consider aggregate scripts – they are essentially a separate layer.

2. Multi Hop: Also factor in multi-hop tests such as a full blown source to final table, skipping over the intermediate hops mentioned above. Think of it as end-to-end data model testing. For simple routines perhaps some effort can be saved by focusing primarily on end-to-end skipping over some of the single hop tests.

3. Business Cases: As I mentioned in the previous post, there are code-to-spec tests and business case tests – run both of them during each QA cycle.

4. Automation: Attempt as hard as you can to automate these tests via SQL scripts – this will allow you to execute them with little manual effort, and do so more frequently. Follow the rule of Do once use many.

Attempt to code your scripts so that they only show you the problems and not all results. When done correctly, a large batch script file of dozens of tests can be run and the reviewer can find the results quickly. Keep in mind your degree of automation depends on whether you have a homogeneous or heterogeneous environment:

Homogeneous: When the source and target are in the same database you have a great leg up as you can let the database tell you where the problems are. For example, a simple test summarizing status codes between the source and the target may look like:

Select UPPER(Status_CD), Count(*) from Source.Table Group by Upper(Status_CD) Minus Select Status, Count(*) from Target.Table Group by Status; Select Status, Count(*) from Target.Table Group by Status Minus Select UPPER(Status_CD), Count(*) from Source.Table Group by Upper(Status_CD);

These two queries above will confirm that your status code summaries are correct across both places. In most scenarios you can do this from your staging or landing area to your actual final tables, or from your final base tables to your aggregates/performance layer.

Heterogenous: When you are dealing with different sources you will have to get more creative if you want to automate or partially automate. Some sort of 3rd party broker will have to perform the delta analysis on the results of two different kinds of queries. Here are a few ideas which are far from the only options:

- Have two sets of scripts attempt to produce the same output files, and use a file comparison utility to assist with finding differences. In the past I’ve successfully used BeyondCompare to assist with this task.

- For smaller datasets, use an ODBC database tool such as MS Access to run through the differences. You can paste database specific syntax into pass-through queries and even perform the minus operations in an automated fashion. Don’t get carried away though!

- Leverage a database gateway to make multiple databases appear as one. For linking multiple Oracle systems together you would use a database link, but for accessing a DB2 table in Oracle you might use an Oracle gateway.

5. Leverage database consistency abilities: This means mostly enabling Foreign Key, Unique and Not Null constraints in your DDL for the duration of development and QA. It is best to turn RI constraints off however for performance runs and production builds.

6. Incrementals and Full Loads: Do not forget about writing any specific test scripts for incremental loads. First off your original scripts should still hold after an incremental load (albeit with perhaps a minor modification). Write additional scripts to confirm incremental specific things such as snapshots and SCDs.

Consider creating test records in your source system, or perhaps identify existing records that can be used for special logic in the transformation. Keep in mind that a special case may not always appear in any data set; sometimes you will have to engineer the data to test the condition.

Specific ETL Tests:

- Row Counts: The bread and butter of data movement testing and the first thing to check. Keep in mind that dimensions should have an additional record or two for Unknown/Unspecified/Not Applicable, so subtract them from your row count query. Also factor in Fact snapshots – they will have a row count per period to check. SCD Type IIs will throw you for a loop as well so be careful.

- Allocations: For low cardinality fields that are heavily used, such as a PRIORITY_CODE or STATUS_CODE, confirm that the row counts/dollars/quantities match for each field. For fact tables, do not be hesitant to join to key dimensions for the breakouts.

Select STATUS, Count(*), Sum(TOTAL_AMT) from FACTS group by STATUS;

- Range Tests: Confirm that Min and Max values, as well as a distinct count of values match:

SELECT MIN(LTRIM(RTRIM(CUST_NAME))), MAX(LTRIM(RTRIM(CUST_NAME))) FROM TABLE;

SELECT COUNT(DISTINCT CUST_NAME) FROM TABLE;

- Transformation Validation: Usually the most difficult and time consuming piece to develop scripts for, these tests confirm that your basic logic is sound. You should factor in all of the following:

- Column transformations, usually IF-THEN-ELSE type of things, math formulas, etc.

- Null value replacements

- Lookups/Referential Integrity – make sure a) the lookup is correct and b) there are no nulls where there shouldn’t be

- Confirm SCD logic triggers properly, and effective date fields are tagged cleanly

- Aggregates match their base tables

- MetaData Columns: Not the most glamorous of tests, but depending on much you use such columns they may be critical to your incremental loads or even researching data issues. For BI Apps systems, new mappings will be the ones to focus on here as the OOB mappings should be in good condition.

- Test Records: Using a set of either random source records or engineered test data records, trace these records from the source through to the target, confirming the logic and transformations along the way. Try not to go overboard with these tests; pick about 10 records or so. This test is particularly useful for incremental testing.

- Anchor Metric Tests: This is an important one for the overall QA process and serves as a good transition from the ETL layer into the OBI layer.

Compare the results of your metrics from a SQL statement on the source system with the base metric from within OBI. This test is especially useful for the BI Apps, where the logic of the metric calculations, even in the BAW schema, can become a bit complex. OBI already understands how to calculate some of these metrics, including filtering out of various records. Focus here on only the base metric out of a metric set: for example, test # Promotions and not Avg. # Promotions, YTD # Promotions or Promotion Rate – those three examples are derived metrics and are best left to the OBI section. As we move into the OBI set of tests, these Anchor Metric Tests provide a basis for OBI QA.

Armed with a collection of script files that test both code-to-spec and desired business functionality, you will be able to confirm a great deal of you dataset ensuring an easier SIT process.

OBI Ad Hoc Testing

To many people, the best way to test OBI is a mystery. Where do you start? How far do you have to go? How far is too far? Are you sure I even need to do this kind of testing?

As a SQL generation engine, it may work just fine for your first 20 cases but on the 21st one it returns the incorrect result for some reason. In a fully ad-hoc environment, even with only a few tables, OBI can produce an impossible number of combinations to QA. Thus we have to strike some balance between QA effort vs. confidence in the model.

How to Test OBI & Automation

Before jumping into the goals and types of tests, I want to discuss a technique I’ve been using for years to help with the OBI model QA. It’s quite simple really: use OBI to make reports and save them in IT only QA dashboards. The great thing about having them in OBI is that they are always available to anyone on the QA or IT team that wishes to run them, whether it’s in SIT, UAT or even Production.

What to Test in OBI?

We should test a few things at the OBI level before moving on to our report and UI QA:

- Skeleton Accuracy: The skeleton refers to the main join paths between the tables on top of which sit the actual metrics and dimensional attributes. Think of metrics and attributes as the “Meat on the bones”.

- Derived Metric Accuracy: Using the base or anchor metrics as a starting point, are the OBI calculations using those metrics accurate? Are your Ratios, Time Series, formulas, etc. all working as designed?

- Data Security Model Accuracy: Logging in as a variety of users should help to confirm that the data filtering of the security model is working as designed. Additionally, access to subject areas should also be confirmed.

The OBI Tests

In this section I’ll go through the tests you should perform in OBI to make sure your Subject Areas are ready for ad-hoc consumption, and that you dashboard reports are constructed on top of a reliable Business Model.

Report Unit Test

First a word on OBI Report Unit test. Typically the OBI team doesn’t get involved with the data; they certainly do not know if values are incorrect or not unless they are wildly off (e.g., a Headcount of 500,000 for a mid level manager). Furthermore they will probably develop the report with incomplete or bad data as the ETL team is still in progress on the code.

What the OBI development team needs to confirm to the best of their ability is that the SQL that OBI generates for a given report is perfect. That means that while developing, the report’s SQL must be reviewed and thoroughly understood by both the RPD architect as well as the report developer. I’ve long said the following two things about Siebel Analytics/OBIEE and the importance of checking SQL: 1) The SQL is the Truth and 2) If it’s not perfect it’s wrong. Until the OBI RPD is thoroughly QA’ed, SQL should be reviewed at every opportunity to make sure it’s doing what it should be.

- Dimensional “Tree-Top”: These tests are quite simple really, and help to confirm an end-to-end perspective of your data model. Pick a base metric, such as a row count or simple $ amount, and create a set table view reports that break out the results by your dimensions. Included a grand total at the top of the report for easy confirmation. For example, for a sales system you may have:

- Total Sales $ by Year

- Total Sales $ by Quarter

- Total Sales $ by Month

- Total Sales $ by Product Category

- Total Sales $ by Customer Class

- Total Sales $ by Org Level 2

- Total Sales $ by Sales Channel

- Etc.

Your goal here is to test all of the physical tables are being use appropriately and that the results are the same across all of them. If your result for the # Units metric is 1,388,689, then it should be the same for all of the dimensions and levels in those dimensions (Year/Qtr/Month). Any lesser results indicate a missing FK, and overstated results might mean a duplicate in the dimension. Note you are not confirming that 1,388,689 is the right number; that is the job of the ETL tests. However if you know what the value should be that simply means you are more confident in your results. Think of the actual number as a variable from algebra; if the variable shows x, then it should show x everywhere, regardless of what the actual value of x is.

When building these tests, make sure that the grand total calculations are done by OBI and not via a 2nd total SQL statement – that defeats the point. You may need to set you aggregation rule to Sum() in the report to accomplish this. If not, exporting to Excel and totaling there is the best way around it, but of course that harms your automation.

You may also note some data problems when doing these tests such as null values or too many values being allocated to the Unspecified bucket. Furthermore, other strange things may appear to you just by looking at the results of the report – for example if all of your data is allocated to 2012 for some reason.

- Derived Metric Tests: Metrics are always a combination of ETL/Data Model and OBI. However some metrics are more OBI and others are more ETL. In this set of tests we want to validate any metric that is an OBI RPD created derivative of a raw or base or anchor metric. Using the example of Promotions from the ETL section, the ETL team has confirmed that the base or raw metric of # Promotions is accurate on the whole. A base metric is a simple one that is mostly derived by the ETL process, and OBI simply puts a Count() or Sum() rule on it – no calculations, CASE statements or Count Distinct() should be used. The tree top tests confirm dimensionality of the tables being linked together. The next thing is to confirm any OBI calc. is working as designed.

Just like before we’ll use OBI reports saved to a special QA dashboard so these tests can easily be re-run on demand across any environment. Our assumption for these tests is that the base or anchor metrics are accurate; these tests will use those as a starting point, and thus are relative to the base metrics. If they are not accurate, the ETL tests will catch the error. We are testing if a derived metric is supposed to be x + y then in fact it is, regardless of whether x or y is actually accurate.

As each metric is different, some creativity will be needed on the part of the test script author. In some cases each metric may need a couple of reports to confirm it, in some cases a download to Excel will be needed. Three examples:

Example#1:

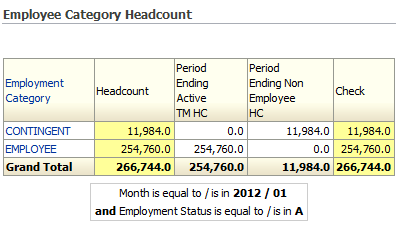

This table breaks out the two kinds of headcount – employee and contingent worker, and cross references the metrics and the dimensions. The first Headcount metric is the raw or base metric while the next two are derived and built in the RPD. The last column is a report level check simply adding up the two derived metrics. Note that I colored them yellow so as to draw attention that those two columns should be the same. (Remember, as BI people we use BI skills for QA in the same way you would your business users!) Also note that we expect the two zeros where appropriate – Employee headcount for the contingent worker row is what it should be.

Example #2:

This example is much more involved. The metric being tested is the average headcount, which is defined as the average of all of the current periods plus the end of the last period. Thus to check 2012 April, we add up 2012 Jan, Feb, Mar and April, plus Dec 2011 and divide by 5. The report on the right is the Average Headcount metric we’re trying to verify. The report on the left shows us the calculation, done in answers and filtered appropriately. Thus in this QA test, Answers logic was used heavily – with a RSUM() and RCount(), again based off of the base metric, to confirm the derived one. Finally, the subtitle text is a bit of help to anyone looking to verify this metric.

Example #3:

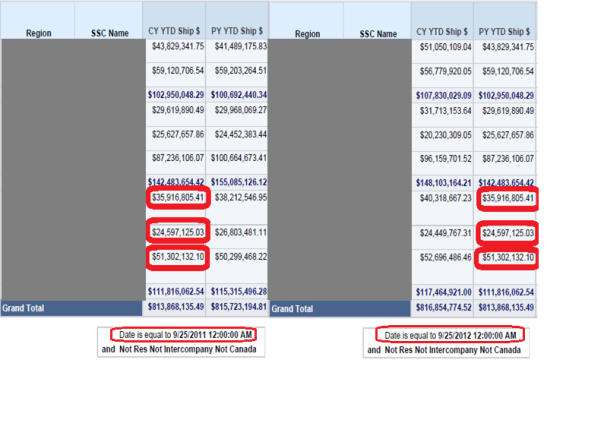

A very common one to build is a time series metric of Prior year – simply build two reports, one filtered for current year with the prior year metric, and another report filtered to the prior year with the current year metric. The Current Year report’s Prior Year metric should equal the Prior Year Report’s Current Year metric.

Keep in mind special kinds of metrics such as level-based metrics (Quarter Total Sales) and nested aggregations such as Last(Sum(Headcount)).

Help with Metric Testing:

In order to make QA more efficient for testing these metrics, especially when done by a 3rd party QA group not involved with the design discussions, deploy good design documentation practices. One in particular that helps the derived metric test out is to document metric definitions by re-using other metrics and dimensional attributes as much as possible.

In the table below I’ve made sure to reuse anything I possibly can, always referring to the eventual business names that we will build. As the document that contains your definitions also captures your final business names that appear in your Subject Area, you are effectively showing logic reuse. This object reuse shows a clear dependency of metric to one another, thus making the job of the QA script writer that much easier. Note also this is a great thing to communicate cleanly to business users what it is you are building and where the base logic of several metrics are identical.

| Metric Name | Definition |

| Headcount | Raw headcount from the data model |

| Active Headcount | “Headcount” where “Employee”.”Status” = ‘Active’ |

| Active Manager Headcount | “Active Headcount” where “Employee”.”Manager Flag” = ‘Y’ |

| Avg. Active Headcount | (“Active Headcount” for the period selected

+ “Active Headcount” for the end of the period before the range selected) / (“# Months” in the range + 1) |

- Derived Dimension Tests: Similar in intent to Derived Metrics tests but far easier and less involved are the testing of any dimensional attributes created in OBI. Think of this as confirming string concatenations – you’ll need the base input columns to show the data on the report.

- Multi-Dimensional Tests: These tests confirm that when you include many dimensions on a query that OBI chooses the right tables and performs complex calculations correctly. This is really the open-ended side of OBI ad-hoc testing- how many combinations should you try?

Ultimately my best recommendation here is to defer these tests until you get to the reporting validations. Covering your subject area with a wide variety of reports that all do a variety of things, have different grains, combinations of metrics, differing dimensionality, etc. is your best bet. Specific tests may be undertaken here, but they will be far from complete.

In reality, as OBI is such a black box to an end user, I strongly recommend an OBI Architect be involved with these queries to determine that they are in fact the correct queries. During the course of report development the SQL for each report should be reviewed to ensure OBI is doing what it is supposed to be doing – using aggregates when it should, not including extra tables or extra queries, that group by’s make sense, that subtotals and report totals are done cleanly. As reports are being completed and unit tested on a dashboard, the developers should know the SQL beforehand and confirm that the correct SQL is being generated at all times. If it works fine until you add a new prompt filter, then you have found a problem.

Of course building additional tests to supplement the dashboard reports doesn’t hurt; just pick a variety of row headers, metrics and filters and confirm the results are still as intended.

- Security Model Tests: If everything above is looking good, the next layer to add to the solution is the security model. For this piece I’m not concerned about dashboard visibility and things like that – just in data sets based on row filtering. Log in as a variety of different users, confirm that various security settings are being picked up correctly, whether it’s a UserID, a region, a Customer_ID or similar. Then it will be necessary to review the SQL to confirm that the query is applying the filter correctly. Again, OBI is a black box. But if you can confirm the SQL is perfect, and it’s on top of a functioning data model and OBI ad-hoc, then you will be in very good shape. You’ll still need to confirm the numbers in a report, but at least you know the security model is doing what it should be doing.

Of course if numbers are available for base metrics for different users, then by all means confirm them at this stage before getting into the reports. Anything to help isolate a problem will be useful.

OBI Reports & Dashboards

This is one area at first glance sounds to be pretty easy – confirming that the reports are correct, but when you look into it a bit more it turns out not always to be so straightforward. Based on what you have to compare to and who is doing the comparison the kinds of tests you are able to execute will differ. A few things to have in your QA plan:

- Interactions: This is the easy stuff: do drill downs work, do the page prompts work properly with the reports, does it navigate to where it’s supposed to, etc. This is the kind of tests that an internal QA group is more familiar with, as in many ways it is similar to testing a web application. The one key piece of data validation that can be performed at this time are on drilldowns and navigations. If your dashboard report shows 25, when you click on that number to go to another page or list/details report, your result should still be 25.

- Report Construction: Compare what the report looks like with the design specification. Check the columns, sorting, filtering, naming, layouts, colors, etc. The difficulty in doing this will vary based on how detailed the design specs are of course.

- UI Standards: Taking a cross functional view, match the pages to the UI Design Standards document if it exists. Are colors as they should be? Are various options as per Standards? Are charts formatted per the document, etc. This is a simple test, again assuming the standards are written down in a document.

- Relative Data Values: In cases where you have a QA team that is unfamiliar with the functional pieces and is not involved with data QA, you can still perform a set of valuable tests focusing on what is in the report, relative to other things in the report. In other words, the QA team may not know that the Sales $ is correct or not, but if the is a % change from last year, they can certainly validate those numbers work relative to the base metric in the report. Recall the algebra concept mentioned before. A QA team should be able to get this year’s numbers, separately get last year’s numbers, perform the % difference calculation in Excel and verify the derived metric is correct. Some cleverness is needed here; the numbers needed may not always be in one report – sometimes the base numbers may be in different reports on different pages.

- Base Report Numbers: This is the hard part – confirm the base metrics in a report based on all of the layers of the OBI solution, including the report filtering, security model, OBI RPD and ETL. Based on your data, environment and what is available for you to use, you will have to find some way of confirming that a base amount in the report is accurate. To this point we’ve confirmed the ETL, the base metric is accurate in OBI, the derived metrics are accurate, and even the report fields match up relative to each other. But what remains is whether or not the base metric in the report is accurate considering the report design (filters mostly) and the security model. If Jane Doe’s Headcount for August is supposed to be 941, does it show 941 in the report for her for that time period?

This is where you’ll have to do some hard work to figure out if that number is indeed accurate. It may mean looking up the value in a source system to confirm; it may mean a data dump and record-by-record comparison, or it could be comparing to another legacy report and possibly accounting for any differences.

- Security: Basic object visibility and permission/privileges are pretty straightforward to test – does an account have access or not? Let the detailed security model and Web Catalog designdrive the test cases and scripts here.

Summary

I’ve laid out a lot of different kinds of tests to consider – use what pieces you can based on your own unique project situation. Remember to go layer-by-layer, but also try to do some end-to-end testing to make sure you have the confidence that your steps are linked together properly. Put a heavy focus on automation and re-use; QA is typically a stress filled time on the project, and saving the teams time will prove invaluable. Finally, when planning your QA process, remember it’s all about the build cycles and the environments you have available to facilitate them.

Posted on October 31, 2012, in BI Theory and Best Practices, OBI EE Development and tagged Ad-hoc, OBI, QA, SIT, Testing, UAT. Bookmark the permalink. 2 Comments.

Another excellent post.. one section that I’d like to address is this sentence: ” Furthermore they will probably develop the report with incomplete or bad data as the ETL team is still in progress on the code.” I’ve seen this become a real problem on projects when the project schedule calls for developing reports too early in the development process. Developers end up spending significant time creating “fake” data to write simple reports that would take less time if the data was available. If it takes more time to create the dummy data than it does to create the report, development should be pushed off until the real data is ready. You’re going to have to retest the report anyway once the data is correct.

Yes Doug that is indeed a good topic. On a recent project we had to do that extensively. In fact we started with Excel mockups, then switched over to OBI as soon as we could, made fake things in the RPD for Design phase and then waited for the real data to show up for Dev. Of course it wasn’t that great in the beginning but it got better.

I have another post on Prototyping here: https://greatobi.wordpress.com/2011/07/06/prototyping-with-obi/

The benefit to doing design in OBI as early as possible is that your users get to see exactly what they will get, and if they ask for a feature, you can actually try it out to see if it will work or not. I hate using Excel mock-ups for OBI requirements, as things don’t always work correctly in any tool being translated from Excel or Wireframes.

Here is an old one on that topic: https://greatobi.wordpress.com/2009/08/24/using-report-specs-for-requirements/

Jeff M.